Compatibility – Most emerging big data tools, such as Spark, integrate easily with Hadoop. They use Hadoop as the storage platform and act as its processing engine.

Hadoop Deployment Methods

1. Standalone Mode – This is Hadoop's default configuration mode. It does not use HDFS; instead, it uses the local file system for both input and output.


- java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries. This issue is often caused by a missing winutils.exe file, which Spark needs in order to initialize the Hive context. The Hive context depends on Hadoop, which in turn requires native libraries to work properly on Windows. Unfortunately, this happens even if you are.
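The "null" in that message is a useful clue: it suggests the Hadoop home directory was never set, so the lookup path starts with the text of a null value. The sketch below only mimics that behaviour with plain string concatenation. It is not Hadoop's actual code, and the class and method names are made up for illustration.

```java
public class WinutilsPathDemo {
    // Builds <hadoop home>\bin\winutils.exe the way the error message suggests.
    // The backslash is hard-coded so the result looks the same on any OS.
    static String winutilsPath(String hadoopHome) {
        return hadoopHome + "\\bin\\" + "winutils.exe";
    }

    public static void main(String[] args) {
        String unset = null; // stands in for a missing hadoop.home.dir / HADOOP_HOME
        System.out.println(winutilsPath(unset));        // null\bin\winutils.exe
        System.out.println(winutilsPath("c:\\hadoop")); // c:\hadoop\bin\winutils.exe
    }
}
```

So when the message starts with null\bin, the first thing to check is whether hadoop.home.dir (or HADOOP_HOME) is set at all, before looking for the file itself.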

Apache Spark is becoming very popular among organizations looking to leverage its fast, in-memory computing capability for big-data processing. This article helps beginners set up Spark in Eclipse/Scala IDE and get familiar with Spark terminology in general.

Hope you have read the previous article on RDD basics, to get a basic understanding of Spark RDD.

Tools Used :

- Scala IDE for Eclipse – Download the latest version of Scala IDE from here. Here, I used the Scala IDE 4.7.0 release, which supports both Scala and Java

- Scala Version – 2.11 (make sure the Scala compiler is set to this version as well)

- Spark Version 2.2 (provided as a Maven dependency)

- Java Version 1.8

- Maven Version 3.3.9 (embedded in Eclipse)

- winutils.exe

To run in a Windows environment, you need the Hadoop binaries in Windows format. winutils provides these, and the hadoop.home.dir system property must point to the directory that contains the bin folder holding winutils.exe (not to bin itself). You can download winutils.exe here and place it at a path like c:/hadoop/bin/winutils.exe, in which case hadoop.home.dir is c:/hadoop. Read this for more information.
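Since a wrong path only shows up later as the winutils error, it can help to check the folder before setting the property. Here is a minimal sketch of that idea using only the JDK. The class and method names are hypothetical, and c:/hadoop is just the example location used above.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class HadoopHomeCheck {
    // True if <hadoopHome>/bin/winutils.exe exists as a regular file.
    static boolean hasWinutils(Path hadoopHome) {
        return Files.isRegularFile(hadoopHome.resolve("bin").resolve("winutils.exe"));
    }

    // Sets hadoop.home.dir only when winutils.exe is really there; fails fast otherwise.
    static void configure(Path hadoopHome) {
        if (!hasWinutils(hadoopHome)) {
            throw new IllegalStateException(
                    "winutils.exe not found under " + hadoopHome.resolve("bin"));
        }
        System.setProperty("hadoop.home.dir", hadoopHome.toAbsolutePath().toString());
    }

    public static void main(String[] args) {
        // Pass your own folder as the first argument; c:/hadoop is only the example path.
        configure(Paths.get(args.length > 0 ? args[0] : "c:/hadoop"));
        System.out.println("hadoop.home.dir = " + System.getProperty("hadoop.home.dir"));
    }
}
```

Calling configure(...) at the top of main, before the Spark context is created, would replace the bare System.setProperty call and give a clearer message when the file is missing.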

Creating a Sample Application in Eclipse –

In Scala IDE, create a new Maven Project –

Replace POM.XML as below –

POM.XML

For creating a Java WordCount program, create a new Java Class and copy the code below –

Java Code for WordCount

```java
import java.util.Arrays;

import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaPairRDD;
import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;

import scala.Tuple2;

public class JavaWordCount {

    public static void main(String[] args) throws Exception {
        String inputFile = "src/main/resources/input.txt";

        // Point hadoop.home.dir at the folder that contains bin\winutils.exe.
        System.setProperty("hadoop.home.dir", "c:/hadoop");

        // Initialize the Spark context with a local master using 4 threads.
        JavaSparkContext sc = new JavaSparkContext(
                new SparkConf().setAppName("wordCount").setMaster("local[4]"));

        // Load data from the input file.
        JavaRDD<String> input = sc.textFile(inputFile);

        // Split each line into words, pair every word with 1, then sum the counts per word.
        JavaPairRDD<String, Integer> counts = input
                .flatMap(line -> Arrays.asList(line.split(" ")).iterator())
                .mapToPair(word -> new Tuple2<>(word, 1))
                .reduceByKey((a, b) -> a + b);

        System.out.println(counts.collect());

        // close() also stops the underlying Spark context.
        sc.close();
    }
}
```
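If the flatMap, mapToPair and reduceByKey chain is new to you, it may help to see the same three steps on an ordinary in-memory list. The sketch below uses only java.util.stream, not Spark, so it runs without any cluster or winutils setup. The class name and sample input are made up for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

public class LocalWordCount {
    // Same three steps as the Spark job, on a List instead of an RDD.
    static Map<String, Integer> countWords(List<String> lines) {
        return lines.stream()
                .flatMap(line -> Arrays.stream(line.split(" "))) // split lines into words
                .collect(Collectors.toMap(
                        word -> word,      // key: the word itself
                        word -> 1,         // value: start every word at 1
                        Integer::sum,      // add the values of equal keys
                        TreeMap::new));    // sorted map, for readable output
    }

    public static void main(String[] args) {
        // prints {be=2, not=1, or=1, to=2}
        System.out.println(countWords(Arrays.asList("to be or", "not to be")));
    }
}
```

The difference in Spark is that the data is split into partitions and the summing happens per partition first, but the result per word is the same.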

Scala Version

To run the Scala version of the WordCount program, create a new Scala object and use the code below –

You may need to set the project as a Scala project to run this. Also make sure the Scala compiler version matches the Scala version of your Spark dependency, which you can set in the build path –

```scala
import org.apache.spark.SparkConf
import org.apache.spark.SparkContext

object ScalaWordCount {

  def main(args: Array[String]): Unit = {
    // Point hadoop.home.dir at the folder that contains bin\winutils.exe.
    System.setProperty("hadoop.home.dir", "c:/hadoop")

    // Create the Spark context with a local master using 4 threads.
    val sc = new SparkContext(new SparkConf().setAppName("Spark WordCount").setMaster("local[4]"))

    // Load the input file.
    val inputFile = sc.textFile("src/main/resources/input.txt")

    // Split each line into words, pair every word with 1, then sum the counts per word.
    val counts = inputFile
      .flatMap(line => line.split(" "))
      .map(word => (word, 1))
      .reduceByKey((a, b) => a + b)

    counts.foreach(println)

    sc.stop()
  }
}
```

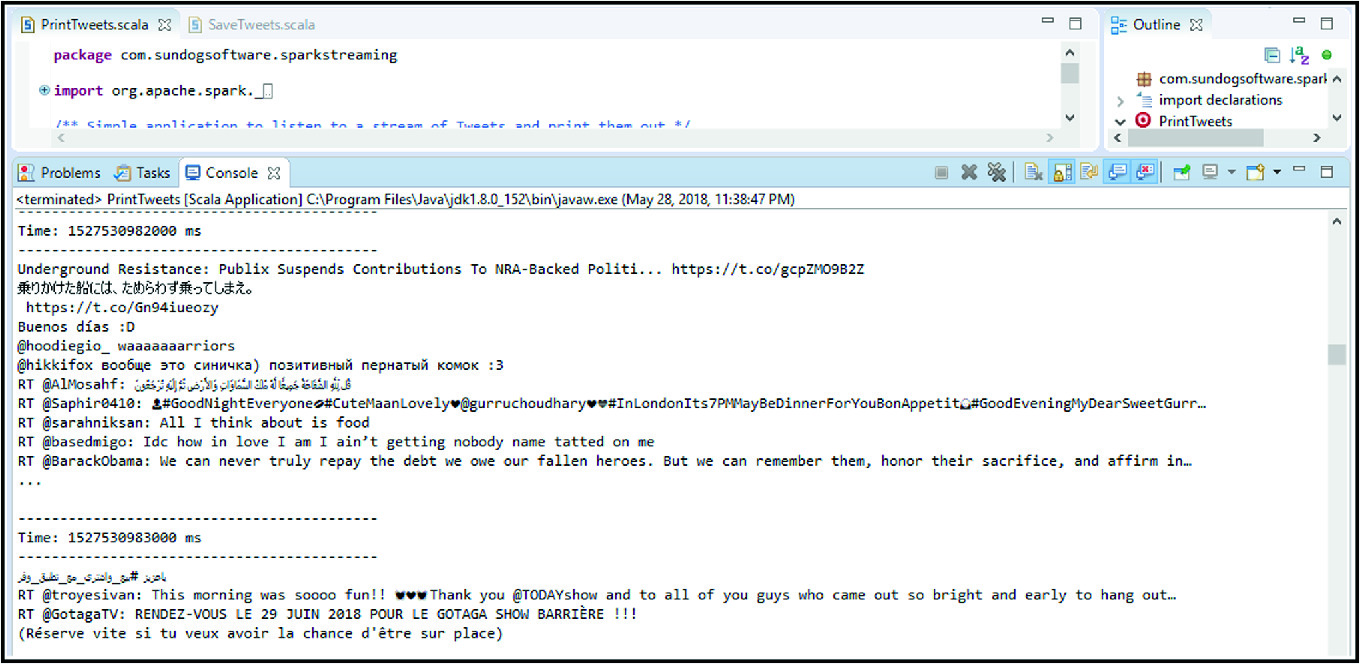

So, your final setup will look like this –

Running the code in Eclipse

You can run the above code, in Scala or Java, with Run As > Scala Application or Run As > Java Application in Eclipse to see the output.


Output

Now you should be able to see the word count output, along with log lines generated using default Spark log4j properties.

In the next post, I will explain how to open the Spark Web UI and look at the stages and tasks that Spark runs internally when executing the code.


If we take the binary distribution of the Apache Hadoop 2.2.0 release and try to run it directly on Microsoft Windows, we'll encounter ERROR util.Shell: Failed to locate the winutils binary in the hadoop binary path. In the previous post, Build, Install, Configure and Run Apache Hadoop 2.2.0 in Microsoft Windows OS, I described how to build a Windows distribution of Apache Hadoop 2.2.0. But if you are feeling a little lazy and want to get started with Hadoop quickly, bypassing the lengthy steps described there, then this post is worth looking into.

Tools and Technologies used in this article :

Problem

Command Prompt

Why?

The binary distribution of the Apache Hadoop 2.2.0 release does not contain some Windows native components (such as winutils.exe and hadoop.dll). These are required (not optional) to run Hadoop on Windows.

Quick Solution

For this post only, I have separately built hadoop-common-project to generate all the required native components. Assuming you are using the default Apache Hadoop 2.2.0 binary distribution, just download all the files in the hadoop-common-2.2.0/bin folder and copy them to the /bin folder. And enjoy!
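If you would rather script the copy than drag files around, here is a minimal sketch using only the JDK. It copies every regular file from one folder into another and overwrites files with the same name. The class name is hypothetical, and both folders are passed in as arguments, so nothing is assumed about where your Hadoop installation lives.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;

public class CopyNativeBin {
    // Copies every regular file from sourceBin into targetBin (created if absent),
    // replacing same-named files. Subfolders are skipped. Returns the number copied.
    static int copyBinFiles(Path sourceBin, Path targetBin) throws IOException {
        Files.createDirectories(targetBin);
        int copied = 0;
        try (DirectoryStream<Path> files = Files.newDirectoryStream(sourceBin)) {
            for (Path file : files) {
                if (Files.isRegularFile(file)) {
                    Files.copy(file, targetBin.resolve(file.getFileName()),
                            StandardCopyOption.REPLACE_EXISTING);
                    copied++;
                }
            }
        }
        return copied;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 2) {
            System.err.println("usage: CopyNativeBin <downloaded bin folder> <Hadoop bin folder>");
            return;
        }
        int n = copyBinFiles(Paths.get(args[0]), Paths.get(args[1]));
        System.out.println("Copied " + n + " files");
    }
}
```

Because existing files are replaced, it is worth keeping a backup of the original bin folder before running it.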

Note : It is highly recommended that you build your own Hadoop Windows binary distribution.

Download SrcCodes

Prebuilt hadoop-common-2.2.0/bin is available on GitHub.
